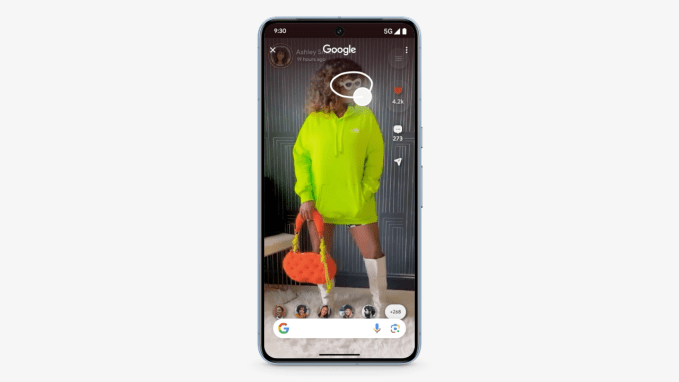

Alongside Samsung’s launch event today, Google announced a new way to search on Android phones dubbed “Circle to Search.” The feature will allow users to search from anywhere on their phone by using gestures like circling, highlighting, scribbling or tapping. The addition, Google explains, is designed to make it more natural to engage with Google Search whenever a question arises, such as when watching a video, viewing a photo inside a social app or chatting with a friend over messaging.

Image Credits: Google

Circle to Search is something of a misnomer for the new Android capability. It’s really about interacting with the text or images on the screen to kick off a search, and that doesn’t always involve a “circling” gesture.

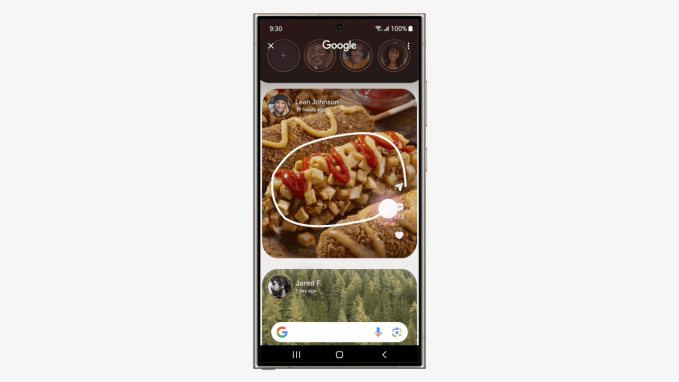

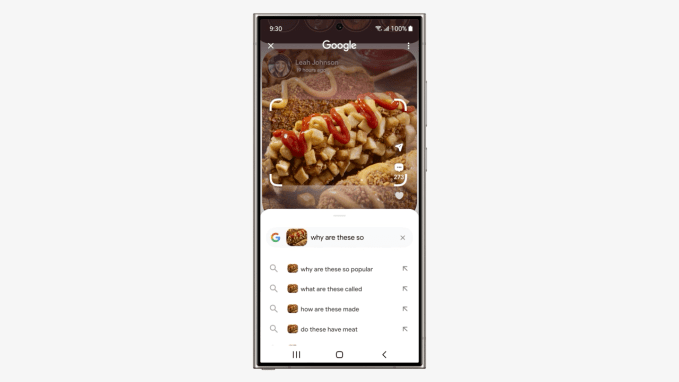

The circling gesture is just one way to initiate a search. It is useful when you want to identify something in a video or photo. For example, if you’re watching a food video featuring a Korean corn dog, you could circle the item and ask, “Why are these so popular?”

Image Credits: Google

Image Credits: Google

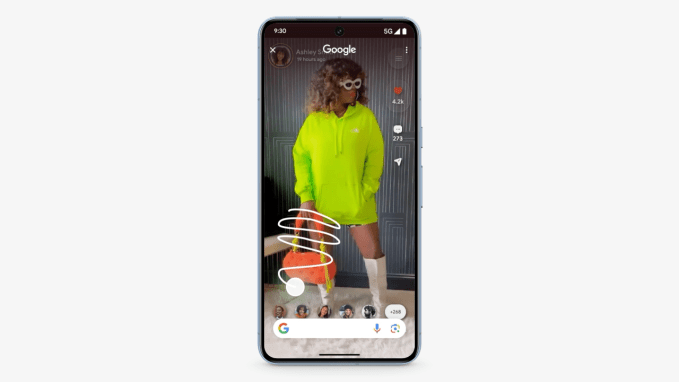

The feature can be engaged through other gestures, as well. If you’re chatting in a messaging app with a friend about a restaurant, you could simply tap on the name of the restaurant to see more details about it. Or you could swipe across a series of words to turn that string into a search, the company explains. In another of Google’s examples, a user swipes across the term “thrift flip” that appears in a YouTube Shorts video about thrifting.

Image Credits: Google

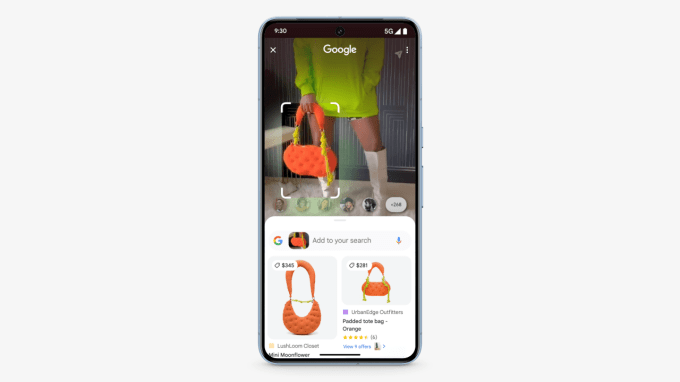

When interested in something visual on your screen, you can circle or scribble across the item. For instance, Google suggests that you could circle the sunglasses a creator wore in their video or scribble on their boots to look up related items on Google without needing to switch between different apps. The scribble gesture can be used on both images and words, Google also notes.

The results users see will vary depending on their query and the Google Labs products they opt into. A simple text search may return traditional search results, while a query that combines an image and text (what Google calls “multisearch”) uses generative AI. And if the user is participating in Google’s Search Generative Experience (SGE) experiment, offered via Google Labs, they’ll be offered AI-powered answers, as with other SGE queries.

Image Credits: Google

The company believes the ability to access search from any app will be meaningful, as users will no longer have to stop what they’re doing to start a search or take a screenshot as a reminder to search for something later.

However, the feature is also arriving at a time when Google Search’s influence is waning. The web has been overrun by SEO-driven pages and spam, making it more difficult to find answers via search. At the same time, generative AI chatbots are now being used to augment or even supplant traditional searches. That latter trend could hurt Google’s core advertising business if more people begin to get their answers elsewhere.

Image Credits: Google

Turning the entire Android phone platform into a surface for search, then, is more than just a “meaningful” change for consumers — it’s something of an admission that Google’s Search business needs shoring up by deeper integration with the smartphone OS itself.

The feature was one of several Google AI announcements made at today’s event, spanning Gemini, Google Messages and Android Auto. It also arrives alongside a new AI-powered overview feature for multisearch in Google Lens.

Circle to Search will launch on January 31 on the new Galaxy S24 Series smartphones, announced today at the Samsung event, as well as on premium Android smartphones, including the Pixel 8 and Pixel 8 Pro. It will be available in all languages and locations where the phones are available. Over time, more Android smartphones will support the feature, Google says.